Did aI do that?

Not quite a post, more of a sharp inhale…

Coding assistants are improving. They’re not good, exactly. But the advances are astounding. SoTA is today, not six weeks ago. Yes, the leaderboards reflect that to some degree. However, the tools built on those LLMs are more effective in a way that seems outsized relative to LLM eval metrics.

The apprentice

Human-in-the-loop is still essential, and the human needs to have considerable expertise. Nudging the AI in the right direction when it strays off course requires both perceiving the deviation and having some sense of how to get back on track. It’s like taking on an apprentice — initially, you send them off to do things and are amazed by what they deliver. Then, as you refine their work to complete the remaining 20% or so, you sense it would be faster and the outcome more reliable if you just did it yourself.

Yes, aI did that! (sort of)

Part I

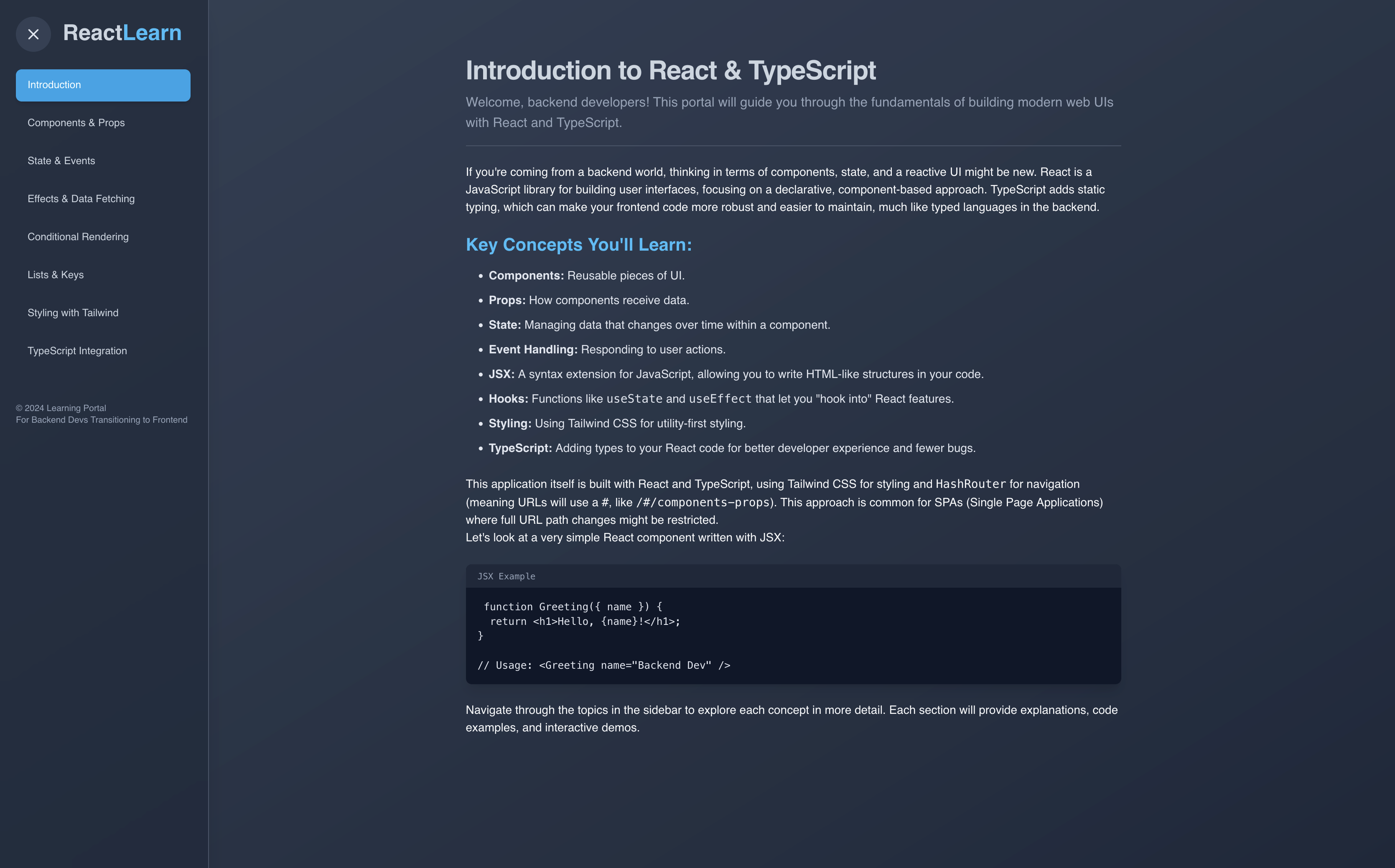

I recently had Google AI Studio build a simple website and generate the content. Aside from minor debugging and a few nudges on design, it produced output well aligned with the prompt.

Part II

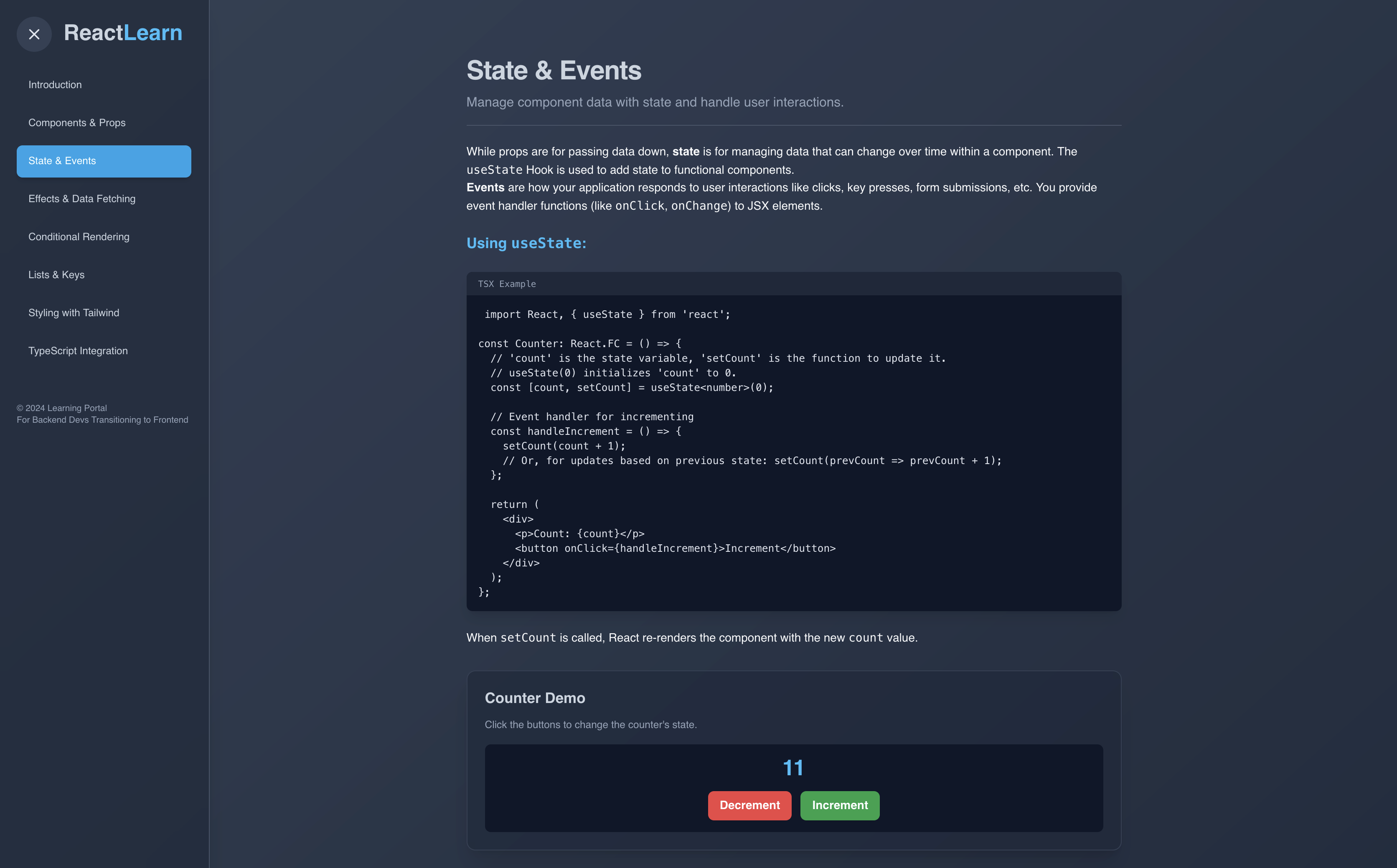

For a slightly more involved app, I used Lovable to build from an initial prompt: “Build an interactive game for guessing the artist on gradual reveal of artworks from MoMA’s open access collection. Use Next.js, React, Typescript, plus other components of your choosing.”

Lovable struggled with this more complex challenge. For comparison, I tried again with Cursor/Claude Sonnet 3.5 (literally the day before 4.0 was released!), using the same prompt. Given the non-determinism of LLM outputs, the stark differences between the two tools’ default design choices and their implementations of the interactive components were not unexpected. It was interesting that neither attempted a minimal, simplest-possible approach, which would have increased the likelihood of the app just working out of the box.

Ultimately, neither Lovable nor Claude produced a working prototype for this more complex challenge.

Greater expectations

As helpful as coding assistants may be, using them does detract from the craftsman-like experience of coding. For the software engineer who aims to fully and deeply understand the intricacies of the code, and to implement clean, best-practice solutions, there are drawbacks.

Like high-quality writing, code benefits from an iterative approach, ideally including input from an editor. That process is likely to reveal possibilities for improvement, which ought to be thoughtfully considered not only for their benefits but also for the broader impacts of implementing them. With LLMs, that process seems to play out a little differently…

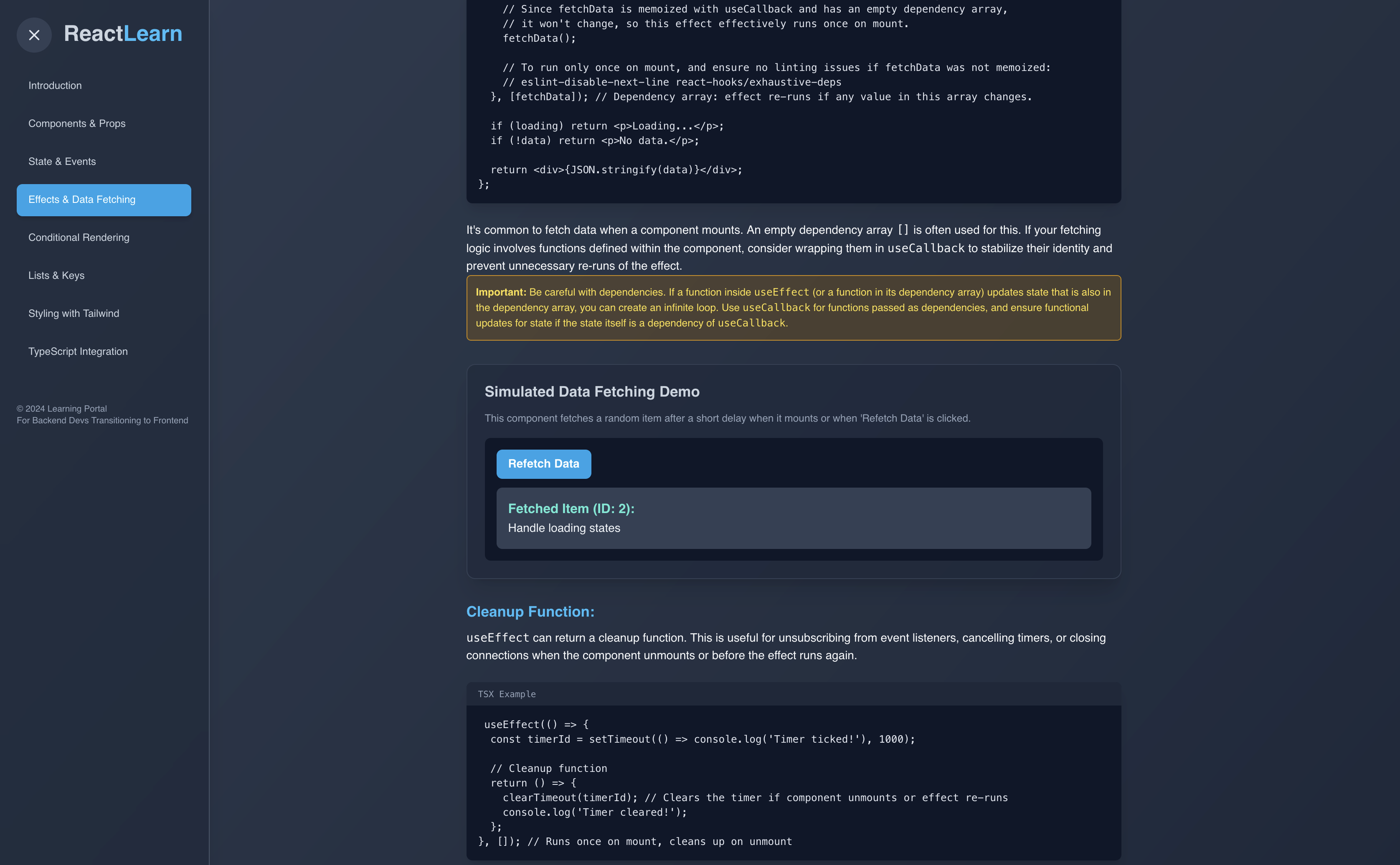

Others have noted that successive iteration on a more complex challenge in a large code base can lead to LLM responses that seem disconnected from prior stages, sometimes ignoring stated requirements and constraints. (See a recent study on this phenomenon, and Alberto Fortin’s take, “After months of coding with LLMs, I'm going back to using my brain.”) As an example, while I was building my artist-guessing-game app, the LLM received explicit instructions detailing the method by which to reveal the artwork. A few iterations in, the visual reveal had deteriorated until it no longer worked at all, even though the reveal method had not been referenced in the iteration conversation.

Inspecting the AI-generated code as a good book editor might — with attention not only to every detail, but to the style and purpose of the work — is one approach, though unlikely to resolve the issue of each reasoning iteration ultimately having insufficient context. Another difficulty in taking on such an editorial role is that the LLM’s approach isn’t necessarily consistent across iterations.

Using an AI coding tool has some similarities to pair programming. Over time, human programmers develop a sense of their pair’s context, and anticipate input to some degree. The two contexts merge, creating a new context with its own scope. Although LLMs excel at adopting a conversational style that feels engaging and friendly, they don’t actually adopt a shared context, and their scope remains an extremely broad training set. The focus and boundaries that naturally arise with a familiar colleague don’t seem to emerge when collaborating with an LLM.

Numerous write-ups on how to work effectively with AI coding assistants are available: here’s one, and here’s Terraform/Ghostty’s Mitchell Hashimoto sharing how he uses AI when coding. An interesting tactic is to show the AI what good code looks like, per your needs and codebase. Birgitta Böckeler shared the full details of using a coding agent for a relatively simple frontend task, and discusses her broader learnings in the Pragmatic Engineer newsletter.

Postscript

To be clear, I’m referring to working on new (toy) apps or scoped tasks. As a counterpoint, Jess Frazelle of Zoo noted that, using Graphite, their coding assistant learned both Zoo’s custom programming language and mechanical engineering principles. Such domain-tuned performance requires significant domain-specific context, though, putting some brackets around the set of use cases where AI coding tools (currently) do their best work.

Going back to the point about reviewing the details of LLM-generated code as an editor might — the overhead of gauging where the LLM is going with its solution on each pass is significant for anything other than the simplest tasks. That’s one key con. The main pro is that its responses are fast. Do those things balance out? Possibly.

A more subtle con, though, is that it becomes tempting to replace careful review with the move-fast-break-things approach: hope for the best, accept the LLM’s code, and feed error messages back to the LLM to resolve one after another. Although… whether that’s a con may not be so cut and dried. After all, vibe coding is a thing.